
Home Network, December 2023

So, I have a bit of a problem. I keep over-engineering my home network. Like, a lot. I gave some background in 2022, just before I started another round of changes, so I figure it’s time to update things a bit and explain a few of my new favorite things.

Aside: every time networking comes up, a former co-worker of mine will ask me “why do you keep doing that to yourself?” Then I kind of mumble something about servers, and multiple people working/schooling from home, and giant collections of media files (photography, videography, and years of collecting blu-rays).

But the real reason is that I enjoy it, and I really hate dealing with “regular” home networking issues. You know, the router needs to be rebooted before anything will connect, or there’s no connectivity in random corners of the house, or family saying “things don’t work right”, but with no data and nothing to look at to get more detail. I like redundancy and monitoring (they’re occupational hazards), and neither of those is something that most home networking gear is good at.

So I’m mostly using 1-2 generation old enterprise hardware. It’s mostly bulletproof and it tends to sell for a few percent of its new price after it goes off of lease and ends up on eBay.

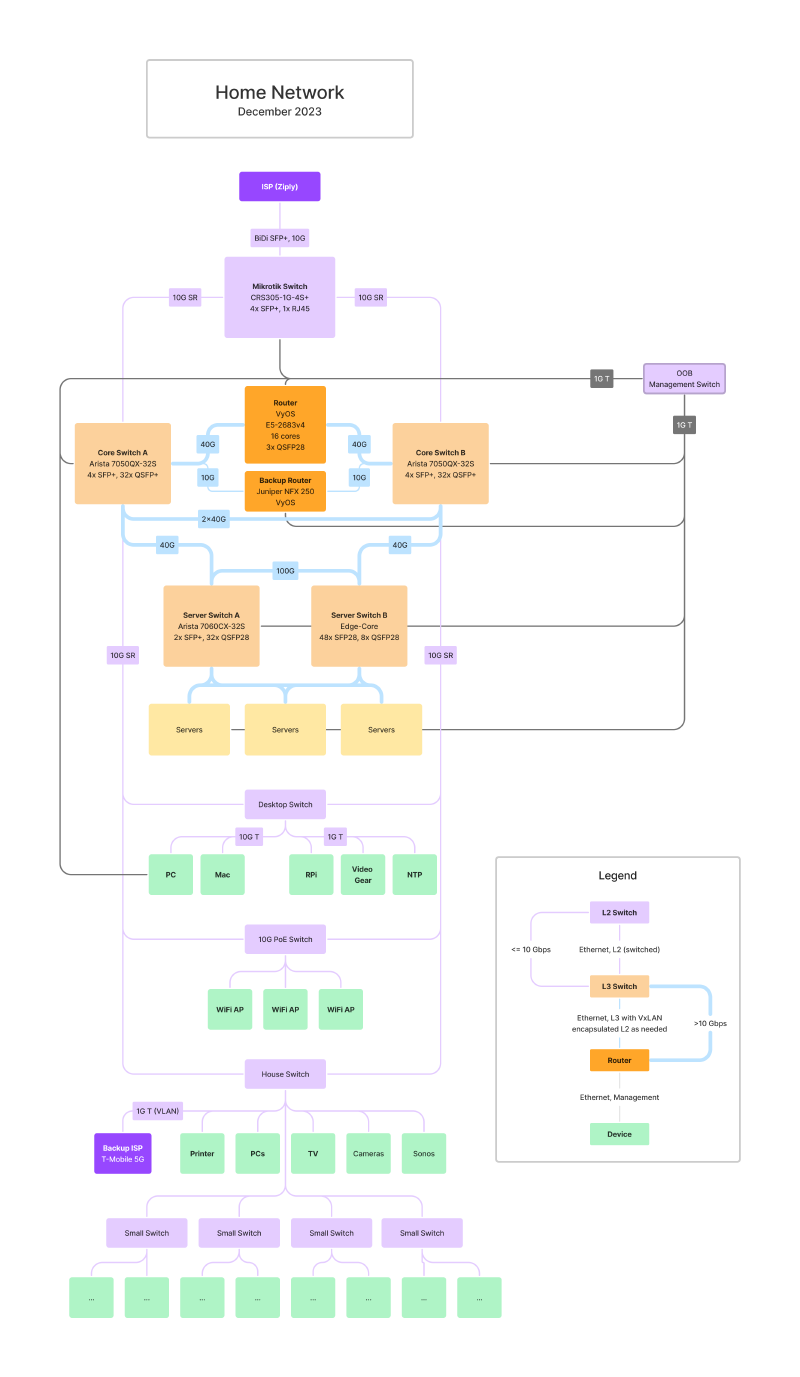

Here’s the current design, which differs quite a bit from where it was 18 months ago:

The big changes over the past 18 months:

- I ripped out almost all of the L2 Ethernet switches that comprised the “house” side of my network (as opposed to the “server” side) and dropped a pair of Arista 7050QX-32S switches in instead. The 7050QX is a 32-port 40 GbE switch. It gives me a lot more room than the 8-port 10G switch that used to sit in the middle of things, and it’s fantastically more capable.

- I replaced the mess of L2 10G links between random switches with 2x10G links back to the pair of Arista switches, and moved to using EVPN-VxLAN for connecting L2 network segments together. EVPN-VxLAN is effectively a way of carrying VLANs over UDP, all controlled by BGP.

- I somewhat reluctantly switched back to using VyOS on a PC instead of using Juniper NFX250s for routing.

- I upgraded my home Internet service. I now have 10G to Ziply, up from 1G before. This came with a few interesting side benefits.

Arista Switches

I was having terrible problems with Spanning Tree during 2021 and 2022. In theory, STP’s job is to manage your L2 network’s topology to eliminate loops. Ethernet doesn’t have any sort of time-to-live on its frames, so loops will make packets circle forever, breaking things. There were 2 big issues:

- I had devices with 3 different flavors of STP in my network, all somewhat incompatible. The Arista 7060CX and Unifi switches all spoke relatively standard modern STP, including RSTP, and worked fine when left by themselves. Unfortunately, Linux’s STP implementation didn’t really play well with them, and it didn’t always agree about which ports should be active and which shouldn’t be. Since my old router was connected to 3 different L2 segments and bridged them together, and the Edge-Core switch also used Linux’s STP implementation, this was problematic. Worse, my Sonos speakers also sometimes participated in STP, and spoke a really really ancient version of STP (802.1D-1998) that uses ancient metrics for link speeds. Mixing the 1998 version and modern STP means that devices have wildly different ideas of what speed various links are; a 1998 device will assign weights to a 10 Mbps link that claims that it’s faster than a 100 Gbps link on a modern device. The upshot of this is that any network change ran a high risk of causing a loop somewhere and breaking things. Sometimes the router would isolate itself (oops), and sometimes it would bridge two segments together that were already connected via another path (also oops). Sometimes Sonos would just manage to loop a heavily-filtered version of my network through its network, doing fun things like causing all of the iPads in the house to crash (!). In short, it just wasn’t reliable.

- Even when it worked perfectly, STP only works by eliminating redundancy. It finds every link that isn’t needed and shuts it down until the network topology changes and the redundant link is useful. Adding more links doesn’t add performance, it just adds redundancy. And complexity, and (eventually, for me) outages.
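The path cost mismatch is easy to see with numbers. Here’s a rough sketch that compares the recommended port costs from 802.1D-1998 with the newer “long” costs from 802.1t / 802.1D-2004. The table values are the standards’ recommended defaults as I understand them; real devices can be configured differently, and the function name is just mine for illustration.

```python
# Recommended port path costs, keyed by link speed in Mbps.
# Short (16-bit) values recommended by IEEE 802.1D-1998.
COST_1998 = {10: 100, 100: 19, 1_000: 4, 10_000: 2}


def cost_modern(speed_mbps: int) -> int:
    """Long (32-bit) recommended cost from 802.1t / 802.1D-2004: 20 Tbps / link speed."""
    return 20_000_000 // speed_mbps


legacy_10m = COST_1998[10]          # what a 1998-era device assigns to 10 Mbps
modern_100g = cost_modern(100_000)  # what a modern device assigns to 100 Gbps

# Lower cost wins in STP, so compare the two directly.
print(f"10 Mbps, 1998 cost:    {legacy_10m}")
print(f"100 Gbps, modern cost: {modern_100g}")
print("10 Mbps link looks better:", legacy_10m < modern_100g)
```

Since STP prefers the lowest total path cost, mixing the two scales in one topology can make a slow legacy link look more attractive than a fast modern one.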

I spent a lot of time dealing with outages caused by loops. It was terrible. Nearly as bad, I wanted >10 Gbps between my desktop and my file server (for working on large video files), and the only good way to do that was to run a fiber strand directly between the two. Since they’re in opposite ends of the house, that wasn’t really something that I wanted to do.

So, in short, what I had didn’t work reliably, it was too slow, and there was no small change that would fix it.

After considering a bunch of options, I looked at picking up a pair of used Arista 7060CX switches, like the one that I already had and really liked, but their price had jumped 6x since I’d bought mine (pandemic!), and that just wasn’t an option. Instead, I bought a pair of older 7050QX switches. They were 40G switches instead of 100G, but were otherwise fairly similar. 1U, 32-ish ports, L3, etc. All Arista switches run the same OS image, which makes managing things much less painful than Juniper, where even minor device differences tend to need distinct OS builds.

My goal was to rip out as much of the L2 network as possible, because it caused me so many problems. Obviously, it’s not practical to give each device its own L3 network (so many desktop things want to use broadcast to find peers), but I wanted to eliminate any notion of an L2 “backbone”.

So, the plan was to attach each “good” L2 switch to the pair of Arista 7050QXs using some flavor of link aggregation, and then cascade all of the “less good” (8 port, etc) switches downstream from the “good” switches. In general, though, each “good” switch island was only going to be connected via the Arista switches. There wouldn’t be any connections between them.

Next, the Arista switches only talk L3 to other L3 devices. That includes each other as well as all of the server switches and servers. Before, I’d ended up running L2 links (switched/bridged/etc) and L3 links (routed) between pairs of L2+L3 devices, and that burned a lot of ports.

Finally, I extended the L2 networks over L3 using EVPN-VxLAN. This is one of those new (ish) things that is increasingly common in cloud environments, but really should be getting more attention elsewhere. It’s a standardized way of (a) shipping L2 Ethernet frames over IP packets and (b) providing a distributed MAC mapping table for VxLAN using BGP (that’s the “EVPN” part of the name). Running L2 Ethernet networks on top of an L3 IP network on top of an L2 Ethernet network seems weird, but there are some very real advantages to be had. Mostly, it lets you entirely get rid of Spanning Tree. You build an L3 backbone for your network, using whatever design works best for you. Then you connect VxLAN-aware devices directly using the L3 backbone, and use VxLAN-aware switches to connect L2 segments together. Most newer L3 switches can talk EVPN-VxLAN just fine. Linux (with FRR) can as well.
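To make the “Ethernet frames over IP” part a bit more concrete, here’s a minimal sketch of the 8-byte VxLAN header from RFC 7348 that gets prepended to each L2 frame before it goes into a UDP datagram. The function names and the sample VNI are mine, purely for illustration; real switches do this in hardware.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VxLAN header for a 24-bit VxLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Return the UDP payload: VxLAN header followed by the original L2 frame."""
    return vxlan_header(vni) + inner_frame


# Hypothetical example: VNI 100 carrying a minimum-size dummy Ethernet frame.
payload = encapsulate(100, bytes(60))
print(payload[:8].hex(), len(payload))
```

The VNI plays the same role as a VLAN ID, just with 24 bits instead of 12, which is why it’s fair to think of this as VLANs carried over UDP.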

The really nice bit of this (from my perspective) is that you can add redundancy and capacity however you want, by adding additional L3 links between devices. We have zillions of tools for this; any dynamic routing protocol (OSPF, for instance) knows how to handle it in an utterly standard way. And because the MAC->port mapping is global and distributed via BGP, it’s more or less impossible to get loops at the VxLAN level. Using BGP for L2 traffic is weird, but we know how to scale BGP; BGP devices with fewer than 10 million routes are weird these days. Adding a few hundred (or thousand, or a few hundred thousand) MAC entries isn’t a big deal. You just need enough RAM.
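As a very rough mental model of the global MAC table, here’s a toy sketch in which every VTEP shares one mapping from (VNI, MAC) to the VTEP that owns it. This is not how BGP works internally; the class, the addresses, and the VNI are all made up for illustration, and the only point is that forwarding becomes a table lookup instead of flood-and-learn.

```python
from dataclasses import dataclass, field


@dataclass
class EvpnControlPlane:
    """Toy stand-in for BGP EVPN: one route table visible to every VTEP."""

    routes: dict = field(default_factory=dict)  # (vni, mac) -> VTEP IP

    def advertise(self, vni: int, mac: str, vtep_ip: str) -> None:
        # Roughly what an EVPN MAC/IP advertisement (route type 2) conveys.
        self.routes[(vni, mac.lower())] = vtep_ip

    def lookup(self, vni: int, mac: str):
        # Known MACs resolve to exactly one VTEP; unknown MACs return None.
        return self.routes.get((vni, mac.lower()))


fabric = EvpnControlPlane()
# Hypothetical hosts: a desktop behind one switch, a file server behind another.
fabric.advertise(100, "02:00:00:00:00:01", "10.0.0.1")
fabric.advertise(100, "02:00:00:00:00:02", "10.0.0.2")

print(fabric.lookup(100, "02:00:00:00:00:02"))
print(fabric.lookup(200, "02:00:00:00:00:02"))
```

Each MAC resolves to a single owner per VNI, which is the intuition behind loops being so hard to create at this layer.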

Even better, it totally decouples logical networks and physical networks. If I want a fast L2 connection between my desktop (in the basement) and my file server (in the garage), I don’t need to run a fiber between the two, I just need to have enough bandwidth in the middle and then configure VxLAN on switches to drop the two onto the same L2 network. Then they can see each other using the usual L2 browsing tools and everything works.

The only real downsides are (a) a bit of extra packet overhead (which is fine in this case, since I use 9000-byte MTUs on L3 links), (b) a bit of added complexity (which is balanced by getting rid of STP and by gaining cross-vendor VxLAN support), and (c) not everything that should support EVPN-VxLAN does today. I really wish my WiFi APs could do EVPN-VxLAN, but almost no APs do today. They’d have to be able to do OSPF and BGP, but that’s not really a giant hurdle for modern CPUs, even low-powered ones.
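For a sense of scale on the overhead, here’s a quick back-of-the-envelope calculation. It assumes an IPv4 underlay with no IP options and untagged inner frames; an IPv6 underlay or inner VLAN tags would add a few more bytes. The function names are just for illustration.

```python
# Header sizes in bytes.
INNER_ETHERNET = 14  # inner frame's Ethernet header (untagged, assumed)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20      # assumes an IPv4 underlay without options


def underlay_mtu_needed(inner_mtu: int) -> int:
    """IP MTU the L3 links need in order to carry a full-size inner packet."""
    return inner_mtu + INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def max_inner_mtu(underlay_mtu: int) -> int:
    """Largest inner IP MTU that fits in the underlay without fragmentation."""
    return underlay_mtu - INNER_ETHERNET - VXLAN_HEADER - OUTER_UDP - OUTER_IPV4


print(underlay_mtu_needed(1500))  # underlay MTU for standard 1500-byte clients
print(max_inner_mtu(9000))        # headroom available with jumbo L3 links
```

Under those assumptions the encapsulation costs 50 bytes per packet, so a 9000-byte underlay has plenty of room for ordinary 1500-byte traffic.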

For the most part, I’m letting my L3 switches handle all of the routing. They act as the default gateway on my “normal” home VLAN. The exceptions are security-related networks. I have a guest WiFi VLAN and an untrusted IOT VLAN, and my router/firewall handles routing for those. This leaves everything as fast and simple as possible.

Back to VyOS for Routing

For about a year, I was using Juniper NFX250s as routers. Unfortunately, I’ve had too many issues with them and recently decided to go back to VyOS.

In no particular order:

- Juniper’s documentation for NFXs is really sparse. You can usually follow their documentation for SRX firewall devices, and it mostly works, but only mostly. Over time, Juniper’s product lines have diverged, and some features work differently on small SRXes, large SRXes, MX routers, older EX switches, newer EX switches, and QFX switches. Good luck figuring out which config flavor the NFX wants, or even if it’s supported at all.

- Juniper doesn’t support VxLAN on NFXes at all. Some of the config syntax is there, but it doesn’t work. Reading between the lines, even SRXes won’t do what I want, and the NFXes can’t even do that much. In order to get a Juniper device to route and do NAT between L3 and VxLAN virtual networks, I’d need a Juniper MX with at least 4 10G ports and a multi-service card (MS-MIC, MS-MPC, or MS-SPC3) installed. I might be able to get that working for under $5k if I was willing to live with borderline obsolete hardware that draws 1 kW. Maybe. So: no.

- I keep hitting weird bugs. The latest: NFX250s don’t respond to IPv6 pings with a sequence number between 35000 and 35999, inclusive. I’ve tried multiple devices and multiple JunOS versions. IPv4 ICMP is fine.

- Juniper claims that “Chassis Cluster” mode works on NFXes, but every time I’ve tried it’s failed spectacularly. I literally don’t think it’s even been tested.

- According to Juniper, they’re only good for 2-4 Gbps of routing, and they only have 2 10G ports. I want to be able to route 10G of traffic. The NFX250 hardware is capable of it (and Juniper says that the MX150, which is the exact same hardware, can do 20 Gbps), but the NFX stack is too cumbersome to actually move that much traffic.

- And possibly worse: they’re slow. I don’t mean packet processing–I mean the CLI. It crawls. Running `commit` takes 10+ seconds. Doing something simple like `run show lldp neighbors` from inside of config mode takes forever.

In the end, I pulled my old VyOS router out of storage and put it back in the rack. It’s a 1U SuperMicro box with 3x 100G and 2x 25G interfaces, and a Xeon E5-2683v4 CPU. That’s a similar vintage to the CPU in the NFX250, but a few notches up the ladder. They’re both Intel Broadwells, but the E5 has 16 cores (vs 6 in the NFX) at 2.1-3 GHz (vs 2.2-2.7 GHz) and twice the memory bandwidth.

I connected the “new” router between the pair of Aristas with 40 GbE and added it to the VxLAN fabric. It can talk to my “ISP” VLAN, the guest WiFi VLAN, and the IOT VLAN over VxLAN, and everything else arrives/leaves via one of the L3 links (with ECMP). It was all up and running in an hour or so. I copied the firewall and NAT configs over from the NFXes (different syntax, but the same basic config model), and that was that.

Since I’m only routing with VyOS, and it doesn’t try to bridge any networks, the issues that I was having with STP before don’t apply.

10 Gbps to the Internet

I’ve had 1 Gbps Internet service at home since early in the pandemic. My average Internet usage is low, but there are spikes where I’m stuck waiting for hours for data to transfer (uploading big videos, installing games, etc). In addition, I’ve had “business” service from my ISP for years, because that was the only way to get a static IP address out of them, but I was paying >2x as much as I’d have paid for residential service with the same speed, and they’d capped out business plans at 1 Gbps, while they’d kept cranking residential speeds up.

As of late 2023, they’ll sell you 2 Gbps, 5 Gbps, 10 Gbps, or 50 Gbps services for home, but only 1 Gbps for businesses. The 2 and 5 Gbps plans are basically the same as their existing offerings–they use some flavor of PON that shares bandwidth between neighbors, but the newer 10G and 50G services are just Ethernet. They patch your fiber strand straight back to a switch in their local POP, and give you static IPv4 and IPv6 addresses.

I took advantage of a couple weeks of downtime (no working from home because work is closed for the holidays) to upgrade to 10G service.

Somewhat surprisingly, it’s better than I’d expected. My ping times dropped from ~3 ms to ~1.2 ms, and Speedtest results got way more consistent. Functionally speaking, it’s more than 10x as fast, at least if you use Speedtest as your benchmark for speed. I rarely saw full-speed results before, and now it’s almost always 9.4 Gbps+. Unfortunately, the rest of the Internet isn’t quite fast enough to keep up, but I’ve seen 3.1 Gbps downloads from Steam, and it’s almost always over 2 Gbps. Best of all, there doesn’t seem to be any latency hit at all until the link is >90% full. Even with a full-speed Speedtest run I only see an extra couple ms of latency. So even giant downloads won’t impact people’s ability to video conference, etc.

Performance

I was somewhat concerned that my router wouldn’t be up to handling 10 Gbps, even though math suggested that it should be fine. I know that Linux on modern PC hardware is capable of doing wire-rate 100 GbE with 64-byte frames, but that’s using DPDK and/or VPP and effectively rolling your own IP stack. VyOS doesn’t do that (yet), and relies on the kernel, which is much slower.

On top of that, I’m using VxLAN, which is (potentially) going to slow everything down a bit. I say potentially because I’m using Mellanox NICs which can do hardware VxLAN offloading, but it’s not obvious if that’s in use, or if VyOS can even support it without a custom kernel.

My router is fairly beefy, but probably not what I’d pick if I was buying new hardware for this purpose today. It’s very capable, but not really optimized for this use and draws more power than newer hardware would use. In an ideal world I’d have fewer, faster cores, but it wasn’t worth spending the money when I had a workable system right at hand.

Running Speedtest, I routinely see around 9.3 Gbps up and down. While it’s running, the system is handling around 800 kpps with 100k interrupts per second and 160k context switches. It looks like it’s peaking with about 2 cores’ worth of IRQ CPU use according to atop. The load isn’t very evenly distributed, and I’m routinely seeing one core hit 40%, so I could probably handle 25 Gbps of Speedtest traffic on this system with no tuning, assuming that there aren’t hidden constraints, cache bandwidth issues, etc.

Note that the uneven distribution is probably because Speedtest is only using a very small number of TCP connections (1?), and each TCP connection’s traffic gets hashed onto a single CPU core by the NIC’s interrupt steering setup. There’s a very good chance that this system could handle more than 25 Gbps if it’s spread across a large number of flows, because the traffic will be better-distributed across CPU cores.
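Here’s a simplified sketch of why one flow pins one core. Real NICs typically use a Toeplitz hash plus an indirection table for receive-side scaling; this stand-in uses CRC32 over the flow tuple instead, and the queue count, addresses, and ports are made up. The behavior it illustrates is the same: identical tuples always land on the same queue.

```python
import zlib

N_QUEUES = 16  # assumption: one receive queue per core on a 16-core box


def rx_queue(src_ip, src_port, dst_ip, dst_port, n_queues=N_QUEUES, proto="tcp"):
    """Pick a receive queue from the flow tuple (CRC32 as a stand-in hash)."""
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    return zlib.crc32(key) % n_queues


# One TCP connection: every packet carries the same tuple.
single_flow = {
    rx_queue("192.0.2.10", 50000, "198.51.100.20", 8080) for _ in range(1000)
}

# Many connections: only the source port differs between them.
many_flows = {
    rx_queue("192.0.2.10", 50000 + i, "198.51.100.20", 8080) for i in range(1000)
}

print("queues used by one flow:  ", len(single_flow))
print("queues used by 1000 flows:", len(many_flows))
```

A single connection can only ever keep one queue (and so roughly one core) busy, while a large number of connections spreads across many of them.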

For the most part, software routing performance is a function of the number of packets per second handled, not the number of bytes. Small (64-byte) packets are only slightly cheaper than large (1500-byte) packets.

I’m seeing around 800,000 packets per second (PPS) now, which is about right for a 10G pipe full of large packets. I’d be surprised if I couldn’t get at least 2.5x that worth of small packets in a single flow, or about 2 MPPS. That’s roughly 1 Gbps of tiny packets, which isn’t terrible. A real hardware router would be faster, as would anything based on DPDK, but it’s more than any reasonable residential use case that I can come up with. And that’s mostly just using 2-4 CPU threads. With multiple flows, the system can probably handle around 10 Gbps of 64-byte packets, although it’d be close.
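The arithmetic behind those numbers is simple enough to sketch. This assumes standard Ethernet framing: frame sizes include the Ethernet header and FCS, and each frame also costs 20 bytes on the wire for the preamble and inter-frame gap. The function names are mine.

```python
WIRE_OVERHEAD = 8 + 12  # preamble/SFD + inter-frame gap, in bytes


def max_pps(link_bps: float, frame_bytes: int) -> float:
    """Packets per second needed to fill a link with frames of a given size."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD) * 8)


def gbps_at(pps: float, frame_bytes: int) -> float:
    """Frame-level throughput in Gbps for a given packet rate."""
    return pps * frame_bytes * 8 / 1e9


full_size = max_pps(10e9, 1518)  # 1500-byte IP packet + 18 bytes of Ethernet
minimum = max_pps(10e9, 64)      # minimum-size Ethernet frames

print(f"10G of full-size frames: {full_size / 1e3:.0f} kpps")
print(f"10G of 64-byte frames:   {minimum / 1e6:.2f} Mpps")
print(f"2 Mpps of 64-byte frames: {gbps_at(2e6, 64):.2f} Gbps")
```

Full-size frames at 10G work out to a bit over 800 kpps, which lines up with what I’m seeing, while filling the same pipe with minimum-size frames takes well over ten times the packet rate.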

Interestingly, the power used by this system varies widely depending on the amount of traffic handled. It draws around 90W at idle (newer hardware would be much lower, but fortunately I live in an area with cheap, clean power), and spikes up an extra 50W while Speedtest is running. None of the other network devices in my system show any particular change in power use.

Reliability

So, I’ve been using EVPN-VxLAN for over a year now. How has it gone?

Perfectly.

I had a few weird teething problems because I didn’t fully understand it and had skipped a few “I don’t need those” configuration steps, and it turned out that yes, I really did need them. Other than that, it’s been utterly solid.

I don’t think I’ve had a single network reliability issue since I cut over. Everything has Just Worked. I’m able to reboot the Aristas one at a time for (rare) upgrades, and the only impact is ~half of my bandwidth going away for a few minutes.

All in all, I’m a giant fan of getting rid of STP entirely and using EVPN-VxLAN. It’s harder to get started and you need slightly more modern hardware, but once you do it’s just bulletproof.